Introduction

Bayesian inference is a statistical approach based on Bayes’ theorem, which describes how to update beliefs based on new evidence. This approach provides a framework for reasoning about probabilities in the presence of uncertainty.

Bayes’ theorem states that:

where:

- is the posterior probability of hypothesis given evidence .

- is the likelihood of observing evidence given that hypothesis is true.

- is the prior probability of hypothesis before observing evidence .

- is the probability of evidence occurring.

This document explores Bayesian methods for risk probability and cost probability density estimation.

Inference for Risk Probability

Consider a risk event that may be caused by multiple root causes . The probability of occurring can be computed as:

where:

- represents the probability of occurring given that is present.

- is the prior probability of the root cause .

- is the probability of occurring when is absent.

- is the probability that does not occur.

The function risk_prob calculates the probability of the

risk event given the root causes and their conditional

probabilities.

Example

First, load the package:

Suppose there are two root causes with probabilities and .

cause_probs <- c(0.3, 0.2)The conditional probabilities of the risk event given each cause are and , respectively. The conditional probabilities of the risk event given not each cause are and .

To calculate the probability of the risk event, use the

risk_prob function:

risk_prob_value <- risk_prob(cause_probs, risks_given_causes, risks_given_not_causes)

cat(risk_prob_value)0.82

Inference for Cost Probability Density

The cost_pdf function uses Bayesian inference to model

the probability distribution of cost outcomes based on the occurrence of

risk events. It assumes that each risk event contributes to the total

cost according to a normal distribution, leading to a mixture model

representation:

where:

- is the probability of risk event .

- is the normal distribution with mean and standard deviation .

- is the probability that risk event does not occur.

- is a point mass at the baseline cost .

The function cost_pdf generates random samples from the

mixture model to estimate the cost distribution.

Example

Suppose there are three risk events with probabilities , , and .

risk_probs <- c(0.3, 0.5, 0.2)The means and standard deviations of the normal distributions for cost given each risk event are:

The baseline cost is .

base_cost <- 2000To generate random samples from the cost distribution, use the

cost_pdf function:

num_sims <- 1000

samples <- cost_pdf(num_sims, risk_probs, means_given_risks, sds_given_risks, base_cost)

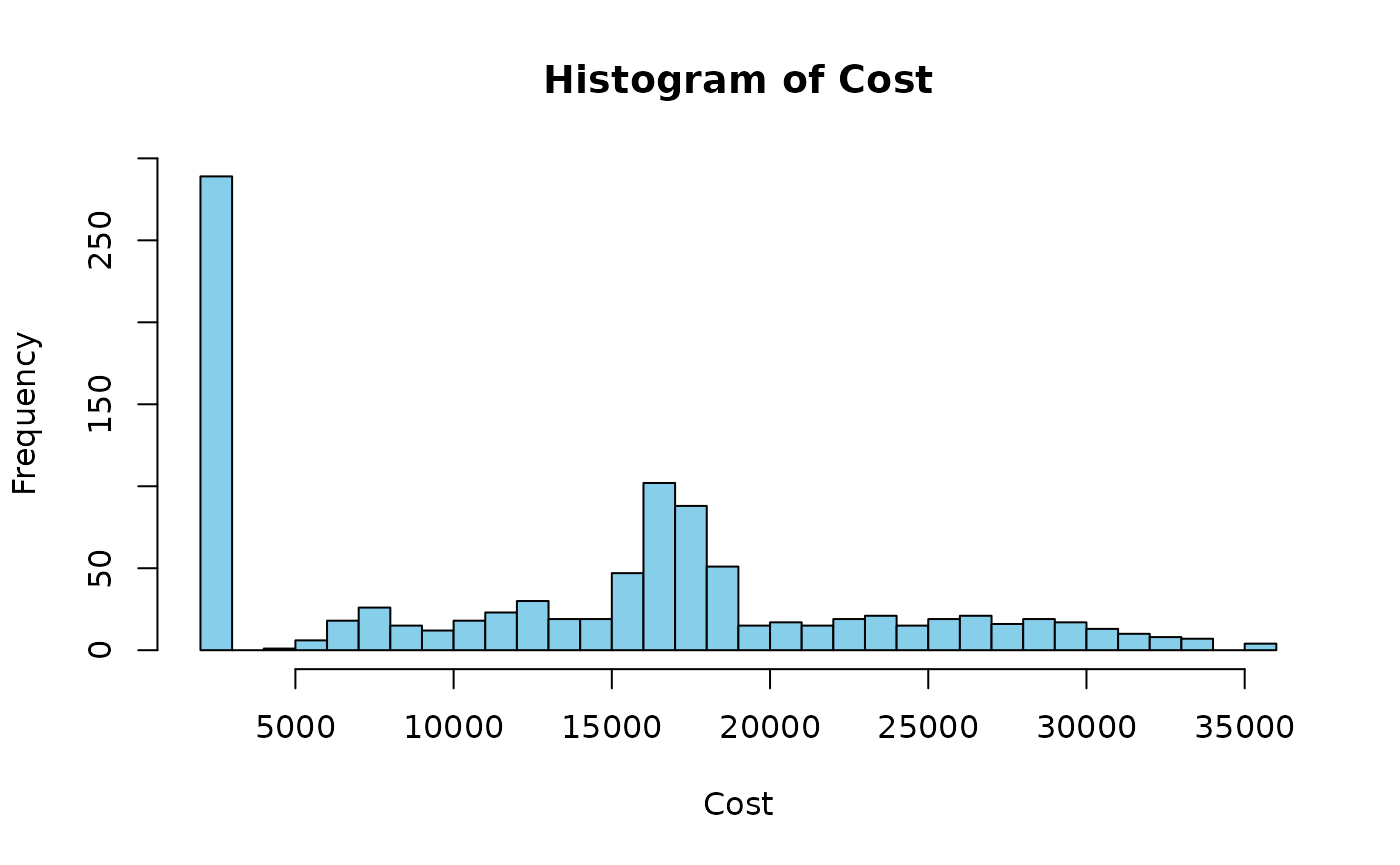

hist(samples, breaks = 30, col = "skyblue", main = "Histogram of Cost", xlab = "Cost")

The histogram above shows the distribution of cost outcomes based on the risk events and their associated costs.

Posterior Risk Probability

Bayesian updating is the process of updating prior beliefs given new evidence. The risk_post_prob function calculates the posterior probability of a risk event given observations of its root causes. This is achieved by applying Bayes’ theorem to update the prior probabilities of root causes based on the observed data.

Example

Suppose there are two root causes with prior probabilities and .

cause_probs <- c(0.3, 0.2)The conditional probabilities of the risk event given each cause are and , respectively. The conditional probabilities of the risk event given not each cause are and .

Suppose the observed root causes are and .

observed_causes <- c(1, NA)To calculate the posterior probability of the risk event, use the

risk_post_prob function:

risk_post_prob <- risk_post_prob(

cause_probs, risks_given_causes,

risks_given_not_causes, observed_causes

)

cat(risk_post_prob)0.6315789

The posterior probability of the risk event is updated based on the observed root causes.

Posterior Cost Probability Density

The cost_post_pdf function generates a posterior

probability density function (PDF) for costs, given observed risk

events. This function simulates random samples from a mixture model

based on Bayesian updating principles.

Example

Suppose there are three risk events with observed values , , and .

observed_risks <- c(1, NA, 1)The means and standard deviations of the normal distributions for cost given each risk event are:

The baseline cost is .

base_cost <- 2000To generate random samples from the posterior cost distribution, use

the cost_post_pdf function:

num_sims <- 1000

posterior_samples <- cost_post_pdf(

num_sims = num_sims,

observed_risks = observed_risks,

means_given_risks = means_given_risks,

sds_given_risks = sds_given_risks,

base_cost = base_cost

)

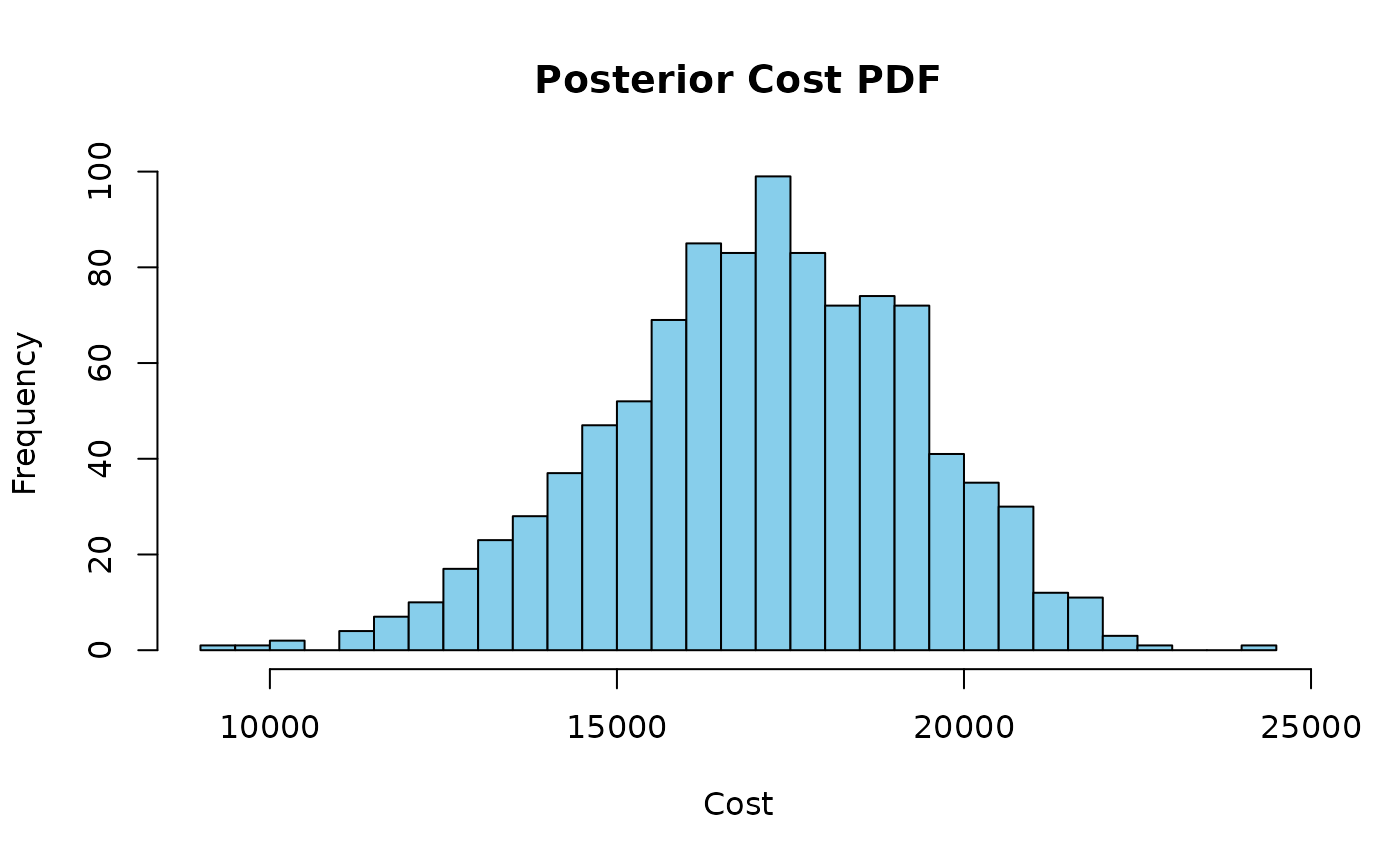

hist(posterior_samples, breaks = 30, col = "skyblue", main = "Posterior Cost PDF", xlab = "Cost")

The histogram above shows the posterior probability density function of costs based on the observed risk events.